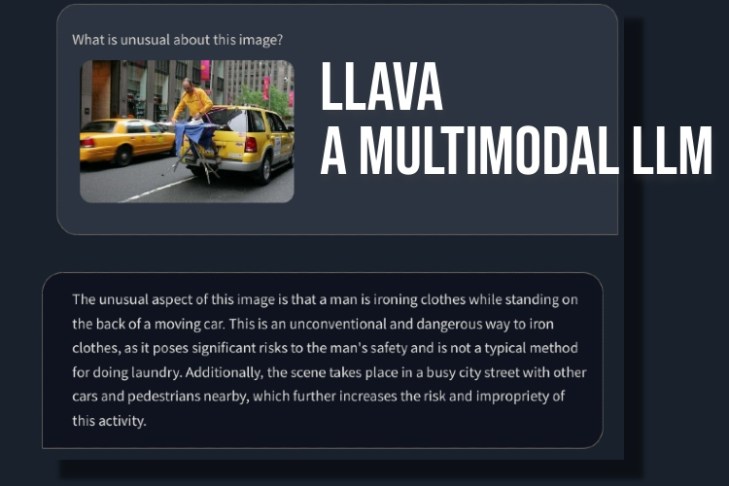

Computer scientists from various universities recently launched LLaVA, an open-source multimodal LLM, which I came across on Twitter last week. Similar to GPT-4, LLaVA handles both text and image inputs. It combines a general-purpose LLM with an image encoder to create a Large Language and Vision Assistant model. Intrigued by its touted features, I decided to test LLaVA to gauge its accuracy and reliability, particularly in comparison to GPT-4’s upcoming multimodal model. Let’s delve into my experience with LLaVA.

Understanding LLaVA: A Multimodal Language Model

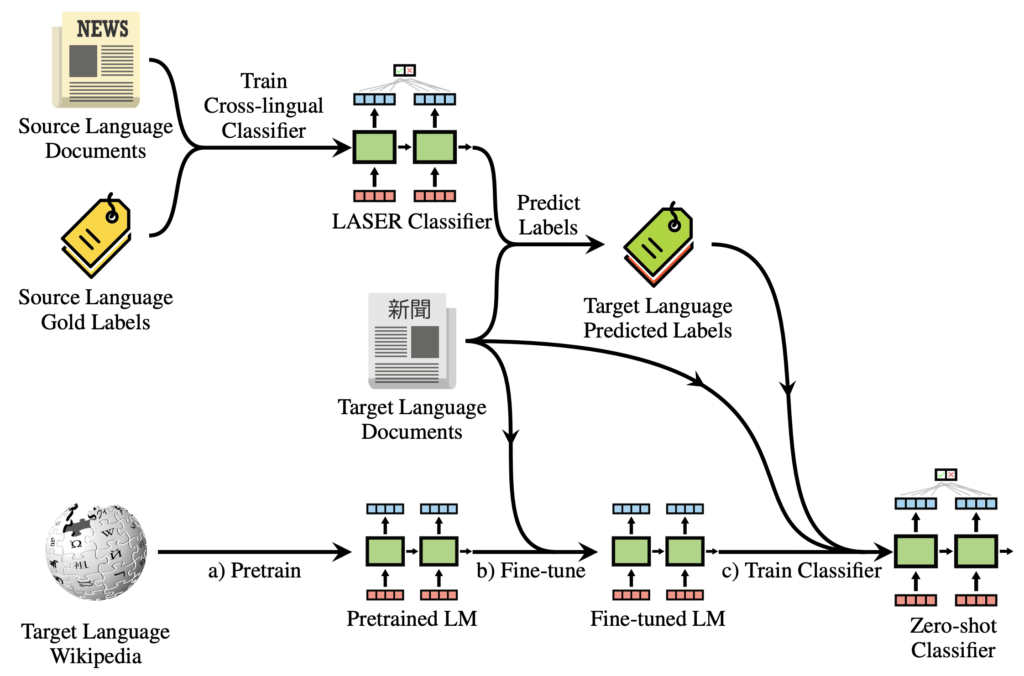

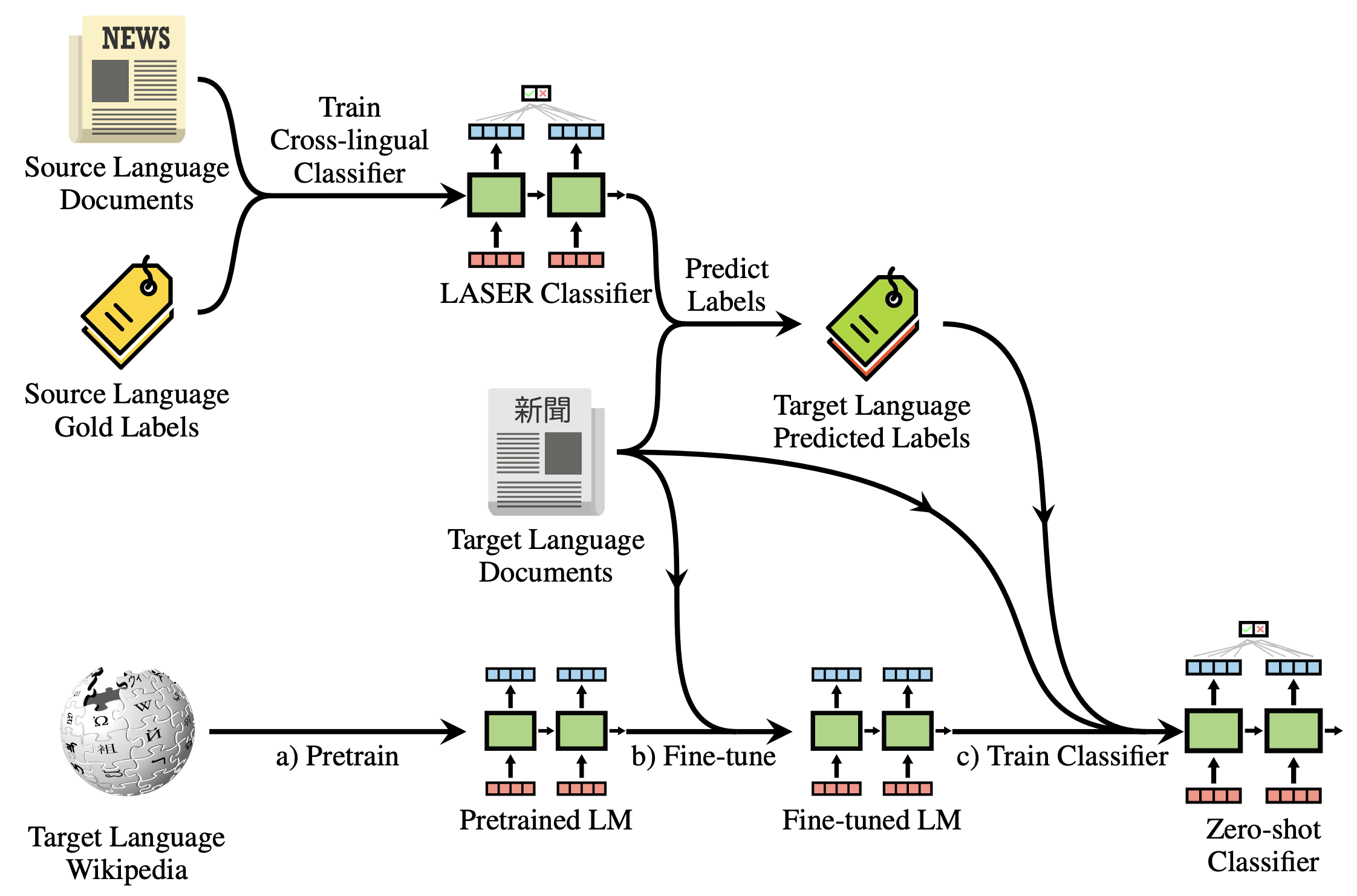

LLaVA is a multimodal LLM akin to OpenAI’s GPT-4: it processes both text and images. Its image processing capability comes from a vision encoder connected to the language model.

Engineered by computer scientists from the University of Wisconsin-Madison, Microsoft Research, and Columbia University, this project showcases the functionality of a multimodal model and evaluates its performance against GPT-4.

Vicuna serves as the large language model (LLM), while CLIP ViT-L/14, developed by OpenAI, functions as the visual encoder. The project used GPT-4 to generate high-quality multimodal instruction-following data, and it reports 92.53% accuracy on the ScienceQA benchmark.
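To make the pairing above concrete, here is a minimal sketch of how a vision encoder and a language model can be joined. Patch features from the image encoder are mapped by a projection layer into the language model's embedding space and placed in front of the text token embeddings. The toy dimensions, the random weights, and the helper names (`make_matrix`, `project_patches`, `build_input_sequence`) are illustrative assumptions, not LLaVA's actual code.

```python
import random


def make_matrix(rows, cols, seed=0):
    """Build a rows x cols matrix of random floats (stand-in for real features/weights)."""
    rng = random.Random(seed)
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


def project_patches(patch_features, weights):
    """Apply a linear projection: each patch vector (vision_dim) becomes an llm_dim vector.

    weights has shape vision_dim x llm_dim.
    """
    columns = list(zip(*weights))  # llm_dim columns, each of length vision_dim
    return [
        [sum(p * w for p, w in zip(patch, column)) for column in columns]
        for patch in patch_features
    ]


def build_input_sequence(image_tokens, text_tokens):
    """Place the projected image tokens in front of the text token embeddings."""
    return image_tokens + text_tokens


# Toy sizes chosen for readability; real encoders use far larger dimensions.
VISION_DIM, LLM_DIM = 4, 6

patches = make_matrix(3, VISION_DIM, seed=1)       # 3 image patches from the vision encoder
projection = make_matrix(VISION_DIM, LLM_DIM, seed=2)
text_tokens = make_matrix(5, LLM_DIM, seed=3)      # 5 text token embeddings

image_tokens = project_patches(patches, projection)
sequence = build_input_sequence(image_tokens, text_tokens)

print(len(sequence), len(sequence[0]))  # 8 6
```

The point of the sketch is only the shape of the data flow: once image patches live in the same embedding space as text tokens, the language model can attend to both in a single sequence.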

Additionally, it’s fine-tuned on general-purpose visual chat data and on reasoning datasets, particularly from the science domain. Overall, LLaVA represents the beginning of a new multimodal reality, and I was excited to test it.

Using LLaVA’s Vision Assistant

1. To utilize LLaVA, visit llava.hliu.cc and explore the demo, powered by the LLaVA-13B-v1 model.

2. Add an image in the top-left corner and select “Crop”. Use square images for the best output.

3. Now, add your question at the bottom and hit “Submit”. The LLM will then study the image and explain everything. You can also ask follow-up questions about the image.
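If you would rather prepare a square image before uploading, the crop region is easy to compute. The sketch below returns the largest centered square for a given image size; the function name `center_square_box` is my own, and the example dimensions are arbitrary.

```python
def center_square_box(width, height):
    """Return (left, upper, right, lower) for the largest centered square crop."""
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    side = min(width, height)
    left = (width - side) // 2
    upper = (height - side) // 2
    return (left, upper, left + side, upper + side)


print(center_square_box(1920, 1080))  # (420, 0, 1500, 1080)
```

The tuple follows the (left, upper, right, lower) order that Pillow's `Image.crop` expects, so, assuming Pillow is installed, it can be passed straight to `image.crop(box)`.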

Multimodal LLM with Visual Capabilities: First Impressions

To check LLaVA’s vision capability, I started with basic examples. I uploaded a painting and asked LLaVA to identify it, and it answered correctly. I also asked follow-up questions, and it handled those well.

Next, I uploaded an image of food items and asked about breakfast options and the total calorie intake. It correctly identified each item, suggested recipes, and estimated the calorie count. Although the recipes lacked detail, the multimodal LLM did suggest ways to combine the three food items into a dish or meal.

I then uploaded an image of a handwritten note requesting a Python script for the Bubble sort algorithm. However, it failed to recognize the text on the paper and couldn’t produce the code. Next, I presented a simple handwritten mathematical question asking for the value of x, and it again gave an incorrect answer.

To dig deeper, I added another mathematical question, this time typed rather than handwritten for clarity. It seems my handwriting wasn’t the issue; the AI simply generated an incorrect equation. It appears it doesn’t use OCR and instead relies on the visual features produced by the CLIP encoder. Whether the math problem was handwritten or typed, the LLaVA model struggled.

Continuing, I requested an explanation for a New Yorker cartoon’s humor, but it failed to grasp the comedic element, merely describing the scene. It only comprehended the assignment when I highlighted the gender aspect, resulting in a correct response.

Finally, I tasked LLaVA with analyzing a medical report, yet it consistently misinterpreted and provided inaccurate summaries. Despite multiple attempts, it failed to extract relevant data from the uploaded image.

The Need for Improvement in LLaVA

In summary, it is too early, particularly in the open-source world, to expect a proficient multimodal LLM. Without a robust foundational language-visual model, the open-source community may lag behind proprietary alternatives. While Meta has released several open-source models, it has not provided any visual models for the community to build on, apart from Segment Anything, which is not relevant in this context.

Google released PaLM-E, an embodied multimodal language model in March 2023, while OpenAI demonstrated GPT-4’s multimodal capabilities during the launch. When asked about an image where a VGA connector is plugged into a phone’s charging port, GPT-4 highlighted the absurdity with clinical precision. In another demonstration during the GPT-4 developer stream, OpenAI’s multimodal model swiftly created a fully-functional website after analyzing a handwritten note.

Simply put, based on my tests of LLaVA, it will take the open-source community much longer to catch up with OpenAI in the language-visual space. Improvements should come with further development. For now, though, I am eagerly awaiting the opportunity to test GPT-4’s multimodal capabilities.

Pritam Chopra is a seasoned IT professional and a passionate blogger hailing from the dynamic realm of technology. With an insatiable curiosity for all things tech-related, Pritam has dedicated himself to exploring and unraveling the intricacies of the digital world.